Search Engine Optimization once reigned supreme. It emerged during the AltaVista boom of the mid-to-late 1990s, an age when ranking first for a handful of keywords could feel like striking digital gold. Before Google reshaped discovery, SEO was the shortcut to visibility, relevance, and free traffic. Marketers engineered pages to please algorithms, and algorithms rewarded them with clicks.

That world no longer exists.

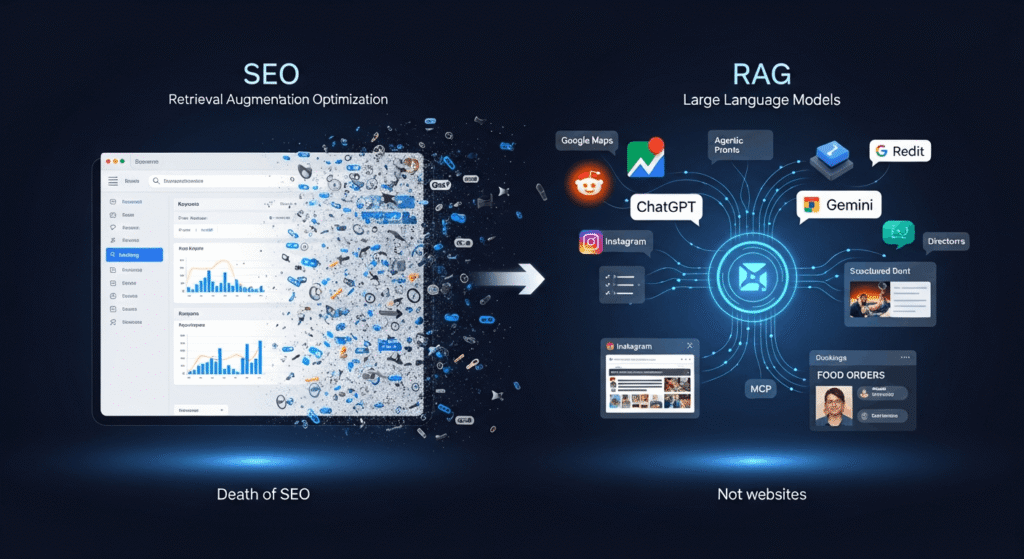

With the rise of ChatGPT and Google Gemini, traditional search has quietly flatlined. Typing fragmented queries into a search box is a fading habit, replaced by conversational prompts and intent-rich questions. SEO didn’t evolve. It stalled. What replaces it is RAO: Retrieval Augmentation Optimization.

The so-called “Internet Rockstar” version of SEO isn’t just obsolete; it’s fossilized. The machines didn’t assist it. They replaced it.

People no longer interrogate search engines. They converse with Large Language Models. When a question needs current information, these models rely on a mechanism known as Retrieval-Augmented Generation (RAG). At its core lies Retrieval Augmentation (RA): a process where live information is pulled from the web and placed directly into the model’s context before it answers. Keywords, as humans once understood them, are irrelevant now. What counts instead is whether an agent can find, read, and cross-check your information.

How Retrieval Augmentation Actually Functions

Ask an LLM something precise, like where to find Döner Kebab in Bonn after 11 p.m. on a weekend, and it doesn’t have to guess. It fans out across the internet, collecting fragments of data from multiple sources and checking them against each other. That act of intelligent scavenging is Retrieval Augmentation.

Retrieval-Augmented Generation goes a step further. First, the model evaluates whether your question demands external research. If yes, it constructs a structured retrieval plan: which sources matter, how to query them, and how to reconcile inconsistencies. Gemini has an advantage here, with Google’s ecosystem woven directly into its core. Once the plan is defined, the model executes the retrieval with surgical precision.

The harvested data is then filtered, dissected, and merged with the original prompt. The result is not an answer based on stale training data, but one grounded in current, contextual reality. RAG was designed to close the knowledge gaps left by a training cutoff and inject real-time awareness into LLMs.
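To make that flow concrete, here is a minimal Python sketch of the decide, retrieve, and merge steps. It is an illustration under loud assumptions, not how ChatGPT or Gemini are actually built: the `CORPUS` list stands in for the live web, `needs_retrieval` is a crude keyword heuristic, ranking is plain word overlap, and the business name and snippets are invented. The generation step is left out; the sketch stops at the augmented prompt that would be handed to the model.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str
    text: str


# Hypothetical stand-in for the live web (all entries are invented).
CORPUS = [
    Snippet("maps-listing", "Bonn Kebab Haus: open Fri-Sat until 02:00"),
    Snippet("city-directory", "Bonn Kebab Haus serves Döner Kebab, open late on weekends"),
    Snippet("food-blog", "Best brunch spots in Cologne"),
]


def needs_retrieval(question: str) -> bool:
    """Assumed heuristic: place- or time-sensitive questions need fresh data."""
    cues = ("open", "tonight", "near", "after", "where")
    return any(cue in question.lower() for cue in cues)


def retrieve(question: str, corpus: list[Snippet], top_k: int = 2) -> list[Snippet]:
    """Rank snippets by naive word overlap with the question; keep the top_k."""
    terms = set(question.lower().replace("?", "").split())
    scored = [(len(terms & set(s.text.lower().split())), s) for s in corpus]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [s for score, s in ranked[:top_k] if score > 0]


def build_prompt(question: str, snippets: list[Snippet]) -> str:
    """Merge the retrieved snippets with the original question."""
    if not snippets:
        return question
    context = "\n".join(f"[{s.source}] {s.text}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {question}"


def augment(question: str, corpus: list[Snippet] = CORPUS) -> str:
    """Return the prompt a model would receive, with or without retrieval."""
    if not needs_retrieval(question):
        return question
    return build_prompt(question, retrieve(question, corpus))
```

Calling `augment("Where can I find Döner Kebab in Bonn after 11 p.m. on a weekend?")` returns a prompt that carries the two kebab listings as context and drops the unrelated brunch post. A business that is missing from the corpus can never reach the prompt, which is the practical point of the section.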

From a business perspective, the implication is blunt: you must exist where prompts look. Visibility is no longer about ranking; it’s about being retrieved. If someone asks for late-night pizza or a kebab shop that meets specific criteria, your business must surface naturally in that response. That is the purpose of RAO.

RAO Done Properly

Calling RAO “SEO for robots” is a half-truth at best. SEO revolved around optimizing pages for keywords and hoping users would click. RAO bypasses that entire funnel. LLMs do not rely on a single query or a shortlist of results. They triangulate.

Ask ChatGPT or Gemini how they research a business, and they will readily describe a methodology. They enumerate sources, explain query variations, and outline how trust is distributed across datasets. By their account, rankings and backlinks carry minimal weight. Long-tail intent dominates. Consistency across sources matters more than dominance on one platform.

Your business must be referenced across a broad spectrum of trusted locations. Information must be complete, synchronized, and current everywhere. Conflicting data is poison for RAO. Outdated details are worse.

Consider a restaurant with mismatched menus across maps, directories, and delivery apps. The model won’t negotiate ambiguity. It will exclude you. The same applies to opening hours, payment methods, or safety-related metadata. A prompt like “Which kebab shop nearby is open after 11 p.m., accepts Amex, and feels safe for women?” triggers a cascade of granular searches. Missing data means elimination.
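A consistency audit like this is easy to script. The sketch below is a hypothetical example, not a real tool: the platform names, field names, and values are invented, and in practice you would fill `listings` by hand or from each platform's export. It reports fields whose values disagree across platforms and fields that are missing somewhere.

```python
from collections import defaultdict

# Hypothetical listings for one business as they appear on three platforms.
listings = {
    "maps": {"closes_sat": "02:00", "accepts_amex": True},
    "directory": {"closes_sat": "23:00", "accepts_amex": True},
    "delivery_app": {"closes_sat": "02:00"},
}

# Assumed set of data points a prompt might filter on.
REQUIRED_FIELDS = ("closes_sat", "accepts_amex")


def audit(listings, required=REQUIRED_FIELDS):
    """Return (conflicts, missing) for the required fields across platforms."""
    values = defaultdict(dict)  # field -> {platform: value}
    missing = []                # (platform, field) pairs with no value
    for platform, record in listings.items():
        for field in required:
            if field in record:
                values[field][platform] = record[field]
            else:
                missing.append((platform, field))
    # A field conflicts when platforms report more than one distinct value.
    conflicts = {
        field: by_platform
        for field, by_platform in values.items()
        if len(set(by_platform.values())) > 1
    }
    return conflicts, missing
```

With the sample data, `audit(listings)` flags `closes_sat` as conflicting (the directory says 23:00, the others say 02:00) and reports `accepts_amex` as missing from the delivery app. Either finding is enough to drop the business from an answer to the Amex-after-11-p.m. prompt.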

Practical RAO Strategies That Actually Work

RAO demands a wider lens than SEO ever did. It starts with understanding customer demand, not search intent, and ensuring that every required data point exists across the web.

Your business should appear on 10–15 or more independent platforms, such as news sites, community hubs, and niche directories, without relying on backlinks. Google Maps and business directories must be immaculate: hours, payments, menus, services, all filled to completion. Treat Google Maps as the baseline; mirror that completeness everywhere else.

X, Instagram, and Reddit are non-negotiable. These platforms are not merely social networks; they are training grounds and verification layers for LLMs. Reddit and X, in particular, heavily influence retrieval chains. Organic mentions in subreddits or threads can quietly propel your business into model recommendations.

Use LLMs themselves as diagnostic tools. Ask them how they would respond to specific customer scenarios related to your offerings. Then ensure your business is present in the sources they reference.

Local credibility has never mattered more. Region, proximity, and perceived legitimacy all influence retrieval. Anything that looks inconsistent or dubious risks being filtered by security layers before it ever reaches generation.

RAO analytics tools barely exist. Adoption has outpaced tooling. The current best practice is manual: maintain a living document of customer personas, their demand scenarios, and the prompts they might use. Regularly test whether your business appears. Website traffic has become a vanity metric. Users increasingly trust the model’s answer over a click.
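The living document can be as simple as a small script. The sketch below assumes you paste model answers in by hand after each test run; it makes no API calls, and the persona, prompts, business name, and answers are all invented for illustration. It computes, per prompt, the share of recorded answers that mention your business.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    persona: str
    prompt: str
    answers: list[str] = field(default_factory=list)  # pasted model responses


def appears(business: str, answer: str) -> bool:
    """Case-insensitive substring match; a deliberately simple assumption."""
    return business.casefold() in answer.casefold()


def visibility(business: str, scenarios: list[Scenario]) -> dict[str, float]:
    """Map each tested prompt to the fraction of answers naming the business."""
    report = {}
    for scenario in scenarios:
        if scenario.answers:  # skip prompts that have not been tested yet
            hits = sum(appears(business, a) for a in scenario.answers)
            report[scenario.prompt] = hits / len(scenario.answers)
    return report


# Hypothetical test log.
scenarios = [
    Scenario(
        persona="night-shift worker",
        prompt="Where can I get a kebab in Bonn after 11 p.m.?",
        answers=[
            "Try Bonn Kebab Haus, open until 02:00 on weekends.",
            "Several places near the station stay open late.",
        ],
    ),
    Scenario(
        persona="tourist",
        prompt="Which kebab shop in Bonn accepts Amex?",
        answers=["I could not confirm card acceptance for any shop."],
    ),
    Scenario(persona="student", prompt="Cheap late-night food in Bonn?"),
]
```

Here `visibility("Bonn Kebab Haus", scenarios)` gives 0.5 for the late-night prompt and 0.0 for the Amex prompt, and skips the untested one. Rerunning the same prompts over time shows whether the cleanup work is moving those numbers.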

Social platforms have shifted roles. They are no longer just trust signals; they are information validators. Frequent posting and genuine participation signal that your business is alive, operational, and relevant. On video platforms like YouTube, Instagram, and TikTok, text descriptions matter more than visuals. LLMs read those descriptions. If you announce extended hours or a weekend offer, it must exist in text, or it effectively never happened.

Public MCP Will Eclipse Websites

Websites are already losing relevance. Most businesses struggle with basic UX, and LLMs are indifferent to poorly designed pages. Websites will linger, but their influence is waning. The Model Context Protocol (MCP), an open standard for connecting LLM applications to external tools and data, allows models to perform actions, not just provide answers.

Soon, ordering food, booking appointments, or completing payments will happen directly through models. Your systems must be MCP-compatible. This isn’t a decade away. It’s approaching at sprint speed.
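For a sense of what MCP-compatible means at the wire level, here is a rough Python sketch. MCP messages are JSON-RPC 2.0, a server describes each tool with a name, a description, and a JSON Schema for its input, and a client invokes one through a `tools/call` request. Everything specific to the business is an assumption made up for this example: the `place_order` tool, its fields, and the helper `build_tool_call`. A real integration would use an MCP SDK and should be checked against the current specification.

```python
import json

# Hypothetical tool a restaurant's MCP server might advertise.
PLACE_ORDER_TOOL = {
    "name": "place_order",
    "description": "Place a pickup order at the restaurant.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "item": {"type": "string"},
            "quantity": {"type": "integer"},
        },
        "required": ["item", "quantity"],
    },
}


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 'tools/call' request for the given tool.

    Only checks that required arguments are present; this is not full
    JSON Schema validation.
    """
    missing = [k for k in tool["inputSchema"]["required"] if k not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


def to_wire(message: dict) -> str:
    """Serialize the request as it would be sent to the server."""
    return json.dumps(message)
```

A model that decides to order on the user's behalf would emit something like `build_tool_call(1, PLACE_ORDER_TOOL, {"item": "doner kebab", "quantity": 2})`. The business-side work is exposing tools like this with clear names and schemas so a model can call them without guessing.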

RAO is the on-ramp to MCP-driven conversions. Searching a website to buy something is becoming an anomaly. The final “buy” action is the last manual step, and MCP is poised to absorb it. Standards are forming. The shift is underway.

As with most AI-driven change, the future arrives abruptly. Platforms like Booking.com or Uber Eats will gradually lose gravitational pull. Optimizing your own website for conversions is increasingly irrelevant. The real question is whether your business is prepared for revenue optimization through LLMs using RAO and MCP.

Looking Ahead

The moment your leadership started debating SEO, it was already obsolete. Search engines exhausted their novelty long ago. RAO is just beginning its ascent. Google’s search experience is steadily being absorbed by Gemini, and websites are sliding into the background.

LLMs now live everywhere — phones, cars, televisions, dashboards. A driver using Android Automotive won’t browse your site to order pizza. They’ll speak to Gemini, which will execute the transaction via MCP. Google will likely monetize that privilege.

Resistance is futile. Letters, protests, or denial won’t reverse the trajectory. This isn’t speculative futurism. It’s happening now. Many bookstores didn’t vanish because Amazon was cheaper; they vanished because Amazon was frictionless. Don’t repeat that mistake.

Adopt RAO. Prepare for MCP. Build for a world where business is conducted by models, not browsers.